Machine Learning Street Talk (MLST)

By Machine Learning Street Talk (MLST)

Jan 28, 2023

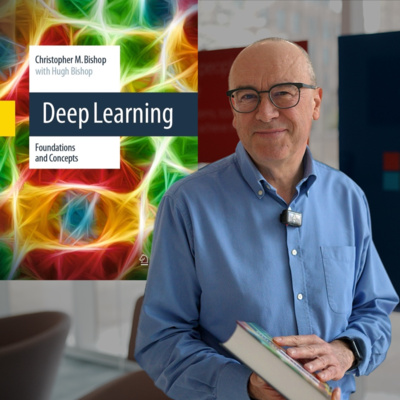

Prof. Chris Bishop's NEW Deep Learning Textbook!

Professor Chris Bishop is a Technical Fellow and Director at Microsoft Research AI4Science, in Cambridge. He is also Honorary Professor of Computer Science at the University of Edinburgh, and a Fellow of Darwin College, Cambridge. In 2004, he was elected Fellow of the Royal Academy of Engineering, in 2007 he was elected Fellow of the Royal Society of Edinburgh, and in 2017 he was elected Fellow of the Royal Society. Chris was a founding member of the UK AI Council, and in 2019 he was appointed to the Prime Minister’s Council for Science and Technology.

At Microsoft Research, Chris oversees a global portfolio of industrial research and development, with a strong focus on machine learning and the natural sciences.

Chris obtained a BA in Physics from Oxford, and a PhD in Theoretical Physics from the University of Edinburgh, with a thesis on quantum field theory.

Chris's contributions to the field of machine learning have been truly remarkable. He authored what is arguably the original textbook in the field, 'Pattern Recognition and Machine Learning' (PRML), which has served as an essential reference for countless students and researchers around the world. It was his second textbook, following his highly acclaimed 'Neural Networks for Pattern Recognition'.

Recently, Chris has co-authored a new book with his son, Hugh, titled 'Deep Learning: Foundations and Concepts.' This book aims to provide a comprehensive understanding of the key ideas and techniques underpinning the rapidly evolving field of deep learning. It covers both the foundational concepts and the latest advances, making it an invaluable resource for newcomers and experienced practitioners alike.

Buy Chris' textbook here:

https://amzn.to/3vvLcCh

More about Prof. Chris Bishop:

https://en.wikipedia.org/wiki/Christopher_Bishop

https://www.microsoft.com/en-us/research/people/cmbishop/

Support MLST:

Please support us on Patreon. We are entirely funded from Patreon donations right now. Patreon supporters get private Discord access, biweekly calls, early-access and exclusive content, and lots more.

https://patreon.com/mlst

Donate: https://www.paypal.com/donate/?hosted_button_id=K2TYRVPBGXVNA

If you would like to sponsor us, so we can tell your story - reach out on mlstreettalk at gmail

TOC:

00:00:00 - Intro to Chris

00:06:54 - Changing Landscape of AI

00:08:16 - Symbolism

00:09:32 - PRML

00:11:02 - Bayesian Approach

00:14:49 - Are NNs One Model or Many, Special vs General

00:20:04 - Can Language Models Be Creative

00:22:35 - Sparks of AGI

00:25:52 - Creativity Gap in LLMs

00:35:40 - New Deep Learning Book

00:39:01 - Favourite Chapters

00:44:11 - Probability Theory

00:45:42 - AI4Science

00:48:31 - Inductive Priors

00:58:52 - Drug Discovery

01:05:19 - Foundational Bias Models

01:07:46 - How Fundamental Is Our Physics Knowledge?

01:12:05 - Transformers

01:12:59 - Why Does Deep Learning Work?

01:16:59 - Inscrutability of NNs

01:18:01 - Example of Simulator

01:21:09 - Control

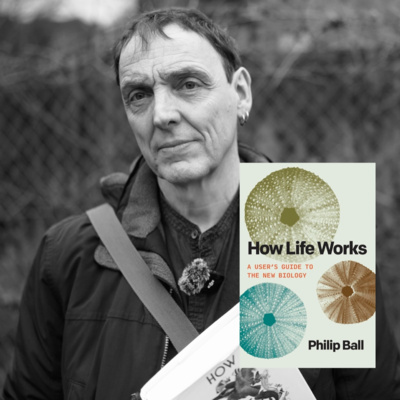

Philip Ball - How Life Works

Dr. Philip Ball is a freelance science writer. He just wrote a book called "How Life Works", discussing how the science of biology has advanced in the last 20 years. We focus on the concept of agency in particular.

He trained as a chemist at the University of Oxford, and as a physicist at the University of Bristol. He worked previously at Nature for over 20 years, first as an editor for physical sciences and then as a consultant editor. His writings on science for the popular press have covered topical issues ranging from cosmology to the future of molecular biology.

YT: https://www.youtube.com/watch?v=n6nxUiqiz9I

Transcript link on YT description

Philip is the author of many popular books on science, including H2O: A Biography of Water, Bright Earth: The Invention of Colour, The Music Instinct and Curiosity: How Science Became Interested in Everything. His book Critical Mass won the 2005 Aventis Prize for Science Books, while Serving the Reich was shortlisted for the Royal Society Winton Science Book Prize in 2014.

This is one of Tim's personal favourite MLST shows, so we have designated it a special edition. Enjoy!

Buy Philip's book "How Life Works" here: https://amzn.to/3vSmNqp

Dr. Paul Lessard - Categorical/Structured Deep Learning

Dr. Paul Lessard and his collaborators have written a paper called "Categorical Deep Learning: An Algebraic Theory of Architectures". They aim to make neural networks more interpretable, composable and amenable to formal reasoning. The key is mathematical abstraction, as exemplified by category theory, using monads to develop a more principled, algebraic approach to structuring neural networks.

We also discussed the limitations of current neural network architectures in terms of their ability to generalise and reason in a human-like way, in particular their inability to perform unbounded computation equivalent to a Turing machine. Paul expressed optimism that this is not a fundamental limitation, but an artefact of current architectures and training procedures.

We also talked about the power of abstraction: it allows us to focus on the essential structure while ignoring extraneous details, which can make certain problems more tractable to reason about. Paul sees category theory as providing a powerful "Lego set" for productively thinking about many practical problems.

Towards the end, Paul gave an accessible introduction to some core concepts in category theory like categories, morphisms, functors, monads etc. We explained how these abstract constructs can capture essential patterns that arise across different domains of mathematics.

Paul is optimistic about the potential of category theory and related mathematical abstractions to put AI and neural networks on a more robust conceptual foundation to enable interpretability and reasoning. However, significant theoretical and engineering challenges remain in realising this vision.

Links:

Categorical Deep Learning: An Algebraic Theory of Architectures

Bruno Gavranović, Paul Lessard, Andrew Dudzik, Tamara von Glehn, João G. M. Araújo, Petar Veličković

Paper: https://categoricaldeeplearning.com/

Symbolica:

https://twitter.com/symbolica

https://www.symbolica.ai/

Dr. Paul Lessard (Principal Scientist - Symbolica)

https://www.linkedin.com/in/paul-roy-lessard/

Interviewer: Dr. Tim Scarfe

TOC:

00:00:00 - Intro

00:05:07 - What is the category paper all about

00:07:19 - Composition

00:10:42 - Abstract Algebra

00:23:01 - DSLs for machine learning

00:24:10 - Inscrutability

00:29:04 - Limitations with current NNs

00:30:41 - Generative code / NNs don't recurse

00:34:34 - NNs are not Turing machines (special edition)

00:53:09 - Abstraction

00:55:11 - Category theory objects

00:58:06 - Cat theory vs number theory

00:59:43 - Data and Code are one and the same

01:08:05 - Syntax and semantics

01:14:32 - Category DL elevator pitch

01:17:05 - Abstraction again

01:20:25 - Lego set for the universe

01:23:04 - Reasoning

01:28:05 - Category theory 101

01:37:42 - Monads

01:45:59 - Where to learn more cat theory

Can we build a generalist agent? Dr. Minqi Jiang and Dr. Marc Rigter

Dr. Minqi Jiang and Dr. Marc Rigter explain an innovative new method to make the intelligence of agents more general-purpose by training them to learn many worlds before their usual goal-directed training, which we call "reinforcement learning".

Their new paper is called "Reward-free curricula for training robust world models":

https://arxiv.org/pdf/2306.09205.pdf

https://twitter.com/MinqiJiang

https://twitter.com/MarcRigter

Interviewer: Dr. Tim Scarfe

Please support us on Patreon. Tim is now doing MLST full-time and taking a massive financial hit. If you love MLST and want this to continue, please show your support! In return you get very early access to shows, plus the private Discord and networking.

https://patreon.com/mlst

We are also looking for show sponsors. Please get in touch if interested: mlstreettalk at gmail.

MLST Discord: https://discord.gg/machine-learning-street-talk-mlst-937356144060530778

Prof. Nick Chater - The Language Game (Part 1)

Nick Chater is Professor of Behavioural Science at Warwick Business School. He works on rationality and language using a range of theoretical and experimental approaches. We discuss his books The Mind is Flat and The Language Game.

Please support me on Patreon (this is now my main job!) - https://patreon.com/mlst - Access the private Discord, networking, and early access to content.

MLST Discord: https://discord.gg/machine-learning-street-talk-mlst-937356144060530778

https://twitter.com/MLStreetTalk

Buy The Language Game:

https://amzn.to/3SRHjPm

Buy The Mind is Flat:

https://amzn.to/3P3BUUC

YT version: https://youtu.be/5cBS6COzLN4

https://www.wbs.ac.uk/about/person/nick-chater/

https://twitter.com/nickjchater?lang=en

Kenneth Stanley created a new social network based on serendipity and divergence

See what Sam Altman advised Kenneth when he left OpenAI! Professor Kenneth Stanley has just launched a brand new type of social network, which he calls a "Serendipity network". The idea is that you follow interests, NOT people. It's a social network without the popularity contest. We discuss the philosophy and technology behind the venture in great detail. The main ideas came from Kenneth's famous book "Why Greatness Cannot Be Planned".

YT version: https://www.youtube.com/watch?v=pWIrXN-yy8g

Chapters should be baked into the MP3 file now

MLST public Discord: https://discord.gg/machine-learning-street-talk-mlst-937356144060530778

Please support our work on Patreon - get access to interviews months early, private Patreon, networking, exclusive content and regular calls with Tim and Keith.

https://patreon.com/mlst

Get Maven here: https://www.heymaven.com/

Kenneth:

https://twitter.com/kenneth0stanley

https://www.kenstanley.net/home

Host - Tim Scarfe:

https://www.linkedin.com/in/ecsquizor/

https://www.mlst.ai/

Original MLST show with Kenneth: https://www.youtube.com/watch?v=lhYGXYeMq_E

Tim explains the book more here:

https://www.youtube.com/watch?v=wNhaz81OOqw

Dr. Brandon Rohrer - Robotics, Creativity and Intelligence

Brandon Rohrer, who obtained his Ph.D. from MIT, is driven by understanding algorithms ALL the way down to their nuts and bolts, so he can make them accessible to everyone by first explaining them in the way HE himself would have wanted to learn!

Please support us on Patreon for loads of exclusive content and private Discord:

https://patreon.com/mlst (public discord)

https://discord.gg/aNPkGUQtc5

https://twitter.com/MLStreetTalk

Brandon Rohrer is a seasoned data science leader and educator with a rich background in creating robust, efficient machine learning algorithms and tools. With a Ph.D. in Mechanical Engineering from MIT, his expertise encompasses a broad spectrum of AI applications, from computer vision and natural language processing to reinforcement learning and robotics. Brandon's career has seen him in Principal-level roles at Microsoft and Facebook. An educator at heart, he also shares his knowledge through detailed tutorials, courses, and his forthcoming book, "How to Train Your Robot."

YT version: https://www.youtube.com/watch?v=4Ps7ahonRCY

Brandon's links:

https://github.com/brohrer

https://www.youtube.com/channel/UCsBKTrp45lTfHa_p49I2AEQ

https://www.linkedin.com/in/brohrer/

How transformers work:

https://e2eml.school/transformers

Brandon's End-to-End Machine Learning school courses, posts, and tutorials

https://e2eml.school

Free course:

https://end-to-end-machine-learning.teachable.com/p/complete-course-library-full-end-to-end-machine-learning-catalog

Blog: https://e2eml.school/blog.html

Ziptie: Learning Useful Features [Brandon Rohrer]

https://www.brandonrohrer.com/ziptie

TOC should be baked into the MP3 file now

00:00:00 - Intro to Brandon

00:00:36 - RLHF

00:01:09 - Limitations of transformers

00:07:23 - Agency - we are all GPTs

00:09:07 - BPE / representation bias

00:12:00 - LLM true believers

00:16:42 - Brandon's style of teaching

00:19:50 - ML vs real world = Robotics

00:29:59 - Reward shaping

00:37:08 - No true Scotsman - when do we accept capabilities as real

00:38:50 - Externalism

00:43:03 - Building flexible robots

00:45:37 - Is reward enough

00:54:30 - Optimization curse

00:58:15 - Collective intelligence

01:01:51 - Intelligence + creativity

01:13:35 - ChatGPT + Creativity

01:25:19 - Transformers Tutorial

Showdown Between e/acc Leader And Doomer - Connor Leahy + Beff Jezos

The world's second-most famous AI doomer, Connor Leahy, sits down with Beff Jezos, the founder of the e/acc movement, to debate technology, AI policy, and human values. As the two discuss technology, AI safety, civilization advancement, and the future of institutions, they clash over their opposing perspectives on how we steer humanity towards a more optimal path.

Watch behind the scenes, get early access and join the private Discord by supporting us on Patreon. We have some amazing content going up there with Max Bennett and Kenneth Stanley this week! https://patreon.com/mlst (public discord) https://discord.gg/aNPkGUQtc5 https://twitter.com/MLStreetTalk

Post-interview with Beff and Connor: https://www.patreon.com/posts/97905213

Pre-interview with Connor and his colleague Dan Clothiaux: https://www.patreon.com/posts/connor-leahy-and-97631416

Leahy, known for his critical perspectives on AI and technology, challenges Jezos on a variety of assertions related to the accelerationist movement, market dynamics, and the need for regulation in the face of rapid technological advancements. Jezos, on the other hand, provides insights into the e/acc movement's core philosophies, emphasizing growth, adaptability, and the dangers of over-legislation and centralized control in current institutions.

Throughout the discussion, both speakers explore the concept of entropy, the role of competition in fostering innovation, and the balance needed to mediate order and chaos to ensure the prosperity and survival of civilization. They weigh up the risks and rewards of AI, the importance of maintaining a power equilibrium in society, and the significance of cultural and institutional dynamism.

Beff Jezos (Guillaume Verdon): https://twitter.com/BasedBeffJezos https://twitter.com/GillVerd Connor Leahy: https://twitter.com/npcollapse

YT: https://www.youtube.com/watch?v=0zxi0xSBOaQ

TOC:

00:00:00 - Intro

00:03:05 - Society library reference

00:03:35 - Debate starts

00:05:08 - Should any tech be banned?

00:20:39 - Leaded Gasoline

00:28:57 - False vacuum collapse method?

00:34:56 - What if there are dangerous aliens?

00:36:56 - Risk tolerances

00:39:26 - Optimizing for growth vs value

00:52:38 - Is vs ought

01:02:29 - AI discussion

01:07:38 - War / global competition

01:11:02 - Open source F16 designs

01:20:37 - Offense vs defense

01:28:49 - Morality / value

01:43:34 - What would Connor do

01:50:36 - Institutions/regulation

02:26:41 - Competition vs. Regulation Dilemma

02:32:50 - Existential Risks and Future Planning

02:41:46 - Conclusion and Reflection

Note from Tim: I baked the chapter metadata into the mp3 file this time. Does that help the chapters show up in your app? Let me know. Also, I accidentally exported a few minutes of dead audio at the end of the file. Sorry about that; just skip on when the episode finishes.

Mahault Albarracin - Cognitive Science

Watch behind the scenes, get early access and join the private Discord by supporting us on Patreon:

https://patreon.com/mlst (public discord)

https://discord.gg/aNPkGUQtc5

https://twitter.com/MLStreetTalk

YT version: https://youtu.be/n8G50ynU0Vg

In this interview on MLST, Dr. Tim Scarfe interviews Mahault Albarracin, who is the director of product for R&D at VERSES and also a PhD student in cognitive computing at the University of Quebec in Montreal. They discuss a range of topics related to consciousness, cognition, and machine learning.

Throughout the conversation, they touch upon various philosophical and computational concepts such as panpsychism, computationalism, and materiality. They consider the "hard problem" of consciousness, which is the question of how and why we have subjective experiences.

Albarracin shares her views on the controversial Integrated Information Theory and the open letter of opposition it received from the scientific community. She reflects on the nature of scientific critique and rivalry, advising caution in declaring entire fields of study as pseudoscientific.

A substantial part of the discussion is dedicated to the topic of science itself, where Albarracin talks about thresholds between legitimate science and pseudoscience, the role of evidence, and the importance of validating scientific methods and claims.

They touch upon language models, discussing whether they can be considered as having a "theory of mind" and the implications of assigning such properties to AI systems. Albarracin challenges the idea that there is a pure form of intelligence independent of material constraints and emphasizes the role of sociality in the development of our cognitive abilities.

Albarracin offers her thoughts on scientific endeavors, the predictability of systems, the nature of intelligence, and the processes of learning and adaptation. She gives insights into the concept of using degeneracy as a way to increase resilience within systems and the role of maintaining a degree of redundancy or extra capacity as a buffer against unforeseen events.

The conversation concludes with her discussing the potential benefits of collective intelligence, likening the adaptability and resilience of interconnected agent systems to those found in natural ecosystems.

https://www.linkedin.com/in/mahault-albarracin-1742bb153/

00:00:00 - Intro / IIT scandal

00:05:54 - Gaydar paper / What makes good science

00:10:51 - Language

00:18:16 - Intelligence

00:29:06 - X-risk

00:40:49 - Self modelling

00:43:56 - Anthropomorphisation

00:46:41 - Mediation and subjectivity

00:51:03 - Understanding

00:56:33 - Resiliency

Technical topics:

1. Integrated Information Theory (IIT) - Giulio Tononi

2. The "hard problem" of consciousness - David Chalmers

3. Panpsychism and Computationalism in philosophy of mind

4. Active Inference Framework - Karl Friston

5. Theory of Mind and its computation in AI systems

6. Noam Chomsky's views on language models and linguistics

7. Daniel Dennett's Intentional Stance theory

8. Collective intelligence and system resilience

9. Redundancy and degeneracy in complex systems

10. Michael Levin's research on bioelectricity and pattern formation

11. The role of phenomenology in cognitive science

$450M AI Startup In 3 Years | Chai AI

Chai AI is the leading platform for conversational chat artificial intelligence.

Note: this is a sponsored episode of MLST.

William Beauchamp is the founder of two $100M+ companies: Chai Research, an AI startup, and Seamless Capital, a hedge fund based in Cambridge, UK. Chaiverse is the Chai AI developer platform, where developers can train, submit and evaluate on millions of real users to win their share of $1,000,000.

https://www.chai-research.com

https://www.chaiverse.com

https://twitter.com/chai_research

https://facebook.com/chairesearch/

https://www.instagram.com/chairesearch/

Download the app on iOS and Android: https://onelink.to/kqzhy9

#chai #chai_ai #chai_research #chaiverse #generative_ai #LLMs

DOES AI HAVE AGENCY? With Professor Karl Friston and Riddhi J. Pitliya

Watch behind the scenes, get early access and join the private Discord by supporting us on Patreon:

https://patreon.com/mlst (public discord)

https://discord.gg/aNPkGUQtc5

https://twitter.com/MLStreetTalk

Agency in the context of cognitive science, particularly when considering the free energy principle, extends beyond just human decision-making and autonomy. It encompasses a broader understanding of how all living systems, including non-human entities, interact with their environment to maintain their existence by minimising sensory surprise.

According to the free energy principle, living organisms strive to minimize the difference between their predicted states and the actual sensory inputs they receive. This principle suggests that agency arises as a natural consequence of this process, particularly when organisms appear to plan ahead many steps in the future.

Riddhi J. Pitliya is doing her Ph.D. in the computational psychopathology lab at the University of Oxford and works with Professor Karl Friston at VERSES.

https://twitter.com/RiddhiJP

References:

THE FREE ENERGY PRINCIPLE—A PRECIS [Ramstead]

https://www.dialecticalsystems.eu/contributions/the-free-energy-principle-a-precis/

Active Inference: The Free Energy Principle in Mind, Brain, and Behavior [Thomas Parr, Giovanni Pezzulo, Karl J. Friston]

https://direct.mit.edu/books/oa-monograph/5299/Active-InferenceThe-Free-Energy-Principle-in-Mind

The beauty of collective intelligence, explained by a developmental biologist | Michael Levin

https://www.youtube.com/watch?v=U93x9AWeuOA

Growing Neural Cellular Automata

https://distill.pub/2020/growing-ca

Carcinisation

https://en.wikipedia.org/wiki/Carcinisation

Prof. KENNETH STANLEY - Why Greatness Cannot Be Planned

https://www.youtube.com/watch?v=lhYGXYeMq_E

On Defining Artificial Intelligence [Pei Wang]

https://sciendo.com/article/10.2478/jagi-2019-0002

Why? The Purpose of the Universe [Goff]

https://amzn.to/4aEqpfm

Umwelt

https://en.wikipedia.org/wiki/Umwelt

An Immense World: How Animal Senses Reveal the Hidden Realms [Yong]

https://amzn.to/3tzzTb7

What Is It Like to Be a Bat? [Nagel]

https://www.sas.upenn.edu/~cavitch/pdf-library/Nagel_Bat.pdf

COUNTERFEIT PEOPLE. DANIEL DENNETT. (SPECIAL EDITION)

https://www.youtube.com/watch?v=axJtywd9Tbo

We live in the infosphere [FLORIDI]

https://www.youtube.com/watch?v=YLNGvvgq3eg

Mark Zuckerberg: First Interview in the Metaverse | Lex Fridman Podcast #398

https://www.youtube.com/watch?v=MVYrJJNdrEg

Black Mirror: Rachel, Jack and Ashley Too | Official Trailer | Netflix

https://www.youtube.com/watch?v=-qIlCo9yqpY

![Understanding Deep Learning - Prof. SIMON PRINCE [STAFF FAVOURITE]](https://d3t3ozftmdmh3i.cloudfront.net/staging/podcast_uploaded_episode400/4981699/4981699-1703622722420-21597385628ab.jpg)

Understanding Deep Learning - Prof. SIMON PRINCE [STAFF FAVOURITE]

Watch behind the scenes, get early access and join private Discord by supporting us on Patreon: https://patreon.com/mlst

https://discord.gg/aNPkGUQtc5

https://twitter.com/MLStreetTalk

In this comprehensive exploration of the field of deep learning with Professor Simon Prince, who has just authored an entire textbook on deep learning, we investigate the technical underpinnings that contribute to the field's unexpected success and confront the enduring conundrums that still perplex AI researchers.

Key points discussed include the surprising efficiency of deep learning models, where high-dimensional loss functions are optimized in ways which defy traditional statistical expectations. Professor Prince provides an exposition on the choice of activation functions, architecture design considerations, and overparameterization. We scrutinize the generalization capabilities of neural networks, addressing the seeming paradox of well-performing overparameterized models. Professor Prince challenges popular misconceptions, shedding light on the manifold hypothesis and the role of data geometry in informing the training process. He also speaks about how layers within neural networks collaborate, recursively reconfiguring instance representations in ways that contribute to both the stability of learning and the emergence of hierarchical feature representations. In addition to the primary discussion of technical elements and learning dynamics, the conversation briefly turns to the ethical implications of AI advancements.

Follow Prof. Prince:

https://twitter.com/SimonPrinceAI

https://www.linkedin.com/in/simon-prince-615bb9165/

Get the book now!

https://mitpress.mit.edu/9780262048644/understanding-deep-learning/

https://udlbook.github.io/udlbook/

Panel: Dr. Tim Scarfe

https://www.linkedin.com/in/ecsquizor/

https://twitter.com/ecsquendor

TOC:

[00:00:00] Introduction

[00:11:03] General Book Discussion

[00:15:30] The Neural Metaphor

[00:17:56] Back to Book Discussion

[00:18:33] Emergence and the Mind

[00:29:10] Computation in Transformers

[00:31:12] Studio Interview with Prof. Simon Prince

[00:31:46] Why Deep Neural Networks Work: Spline Theory

[00:40:29] Overparameterization in Deep Learning

[00:43:42] Inductive Priors and the Manifold Hypothesis

[00:49:31] Universal Function Approximation and Deep Networks

[00:59:25] Training vs Inference: Model Bias

[01:03:43] Model Generalization Challenges

[01:11:47] Purple Segment: Unknown Topic

[01:12:45] Visualizations in Deep Learning

[01:18:03] Deep Learning Theories Overview

[01:24:29] Tricks in Neural Networks

[01:30:37] Critiques of ChatGPT

[01:42:45] Ethical Considerations in AI

References on YT version VD: https://youtu.be/sJXn4Cl4oww

Prof. BERT DE VRIES - ON ACTIVE INFERENCE

Watch behind the scenes with Bert on Patreon: https://www.patreon.com/posts/bert-de-vries-93230722 https://discord.gg/aNPkGUQtc5 https://twitter.com/MLStreetTalk

Note, there is some mild background music on chapter 1 (Least Action), 3 (Friston) and 5 (Variational Methods) - please skip ahead if annoying. It's a tiny fraction of the overall podcast.

YT version: https://youtu.be/2wnJ6E6rQsU

Bert de Vries is Professor in the Signal Processing Systems group at Eindhoven University. His research focuses on the development of intelligent autonomous agents that learn from in-situ interactions with their environment. His research draws inspiration from diverse fields including computational neuroscience, Bayesian machine learning, Active Inference and signal processing. Bert believes that development of signal processing systems will in the future be largely automated by autonomously operating agents that learn purposefully from situated environmental interactions.

Bert received his M.Sc. (1986) and Ph.D. (1991) degrees in Electrical Engineering from Eindhoven University of Technology (TU/e) and the University of Florida, respectively. From 1992 to 1999, he worked as a research scientist at Sarnoff Research Center in Princeton (NJ, USA). Since 1999, he has been employed in the hearing aids industry, both in engineering and managerial positions. De Vries was appointed part-time professor in the Signal Processing Systems Group at TU/e in 2012.

Contact:

https://twitter.com/bertdv0

https://www.tue.nl/en/research/researchers/bert-de-vries

https://www.verses.ai/about-us

Panel: Dr. Tim Scarfe / Dr. Keith Duggar

TOC:

[00:00:00] Principle of Least Action

[00:05:10] Patreon Teaser

[00:05:46] On Friston

[00:07:34] Capm Peterson (VERSES)

[00:08:20] Variational Methods

[00:16:13] Dan Mapes (VERSES)

[00:17:12] Engineering with Active Inference

[00:20:23] Jason Fox (VERSES)

[00:20:51] Riddhi Jain Pitliya

[00:21:49] Hearing Aids as Adaptive Agents

[00:33:38] Steven Swanson (VERSES)

[00:35:46] Main Interview Kick Off, Engineering and Active Inference

[00:43:35] Actor / Streaming / Message Passing

[00:56:21] Do Agents Lose Flexibility with Maturity?

[01:00:50] Language Compression

[01:04:37] Marginalisation to Abstraction

[01:12:45] Online Structural Learning

[01:18:40] Efficiency in Active Inference

[01:26:25] SEs become Neuroscientists

[01:35:11] Building an Automated Engineer

[01:38:58] Robustness and Design vs Grow

[01:42:38] RXInfer

[01:51:12] Resistance to Active Inference?

[01:57:39] Diffusion of Responsibility in a System

[02:10:33] Chauvinism in "Understanding"

[02:20:08] On Becoming a Bayesian

Refs:

RXInfer

https://biaslab.github.io/rxinfer-website/

Prof. Ariel Caticha

https://www.albany.edu/physics/faculty/ariel-caticha

Pattern recognition and machine learning (Bishop)

https://www.microsoft.com/en-us/research/uploads/prod/2006/01/Bishop-Pattern-Recognition-and-Machine-Learning-2006.pdf

Data Analysis: A Bayesian Tutorial (Sivia)

https://www.amazon.co.uk/Data-Analysis-Bayesian-Devinderjit-Sivia/dp/0198568320

Probability Theory: The Logic of Science (E. T. Jaynes)

https://www.amazon.co.uk/Probability-Theory-Principles-Elementary-Applications/dp/0521592712/

#activeinference #artificialintelligence

MULTI AGENT LEARNING - LANCELOT DA COSTA

Please support us https://www.patreon.com/mlst

https://discord.gg/aNPkGUQtc5

https://twitter.com/MLStreetTalk

Lance Da Costa aims to advance our understanding of intelligent systems by modelling cognitive systems and improving artificial systems.

He's a PhD candidate with Greg Pavliotis and Karl Friston jointly at Imperial College London and UCL, and a student in the Mathematics of Random Systems CDT run by Imperial College London and the University of Oxford. He completed an MRes in Brain Sciences at UCL with Karl Friston and Biswa Sengupta, an MASt in Pure Mathematics at the University of Cambridge with Oscar Randal-Williams, and a BSc in Mathematics at EPFL and the University of Toronto.

Summary:

Lance did pure math originally but became interested in the brain and AI. He started working with Karl Friston on the free energy principle, which claims all intelligent agents minimize free energy for perception, action, and decision-making. Lance has worked to provide mathematical foundations and proofs for why the free energy principle is true, starting from basic assumptions about agents interacting with their environment. This aims to justify the principle from first principles in physics.

Dr. Scarfe and Da Costa discuss different approaches to AI: the free energy/active inference approach, focused on mimicking human intelligence, versus approaches focused on maximizing capability, like deep reinforcement learning. Lance argues active inference provides advantages for explainability and safety compared to black box AI systems. It provides a simple, sparse description of intelligence based on a generative model and free energy minimization.

They discuss the need for structured learning and acquiring core knowledge to achieve more human-like intelligence. Lance highlights work from Josh Tenenbaum's lab that shows similar learning trajectories to humans in a simple Atari-like environment.

Incorporating core knowledge constrains the space of possible generative models the agent can use to represent the world, making learning more sample efficient. Lance argues active inference agents with core knowledge can match human learning capabilities.

They discuss how to make generative models interpretable, such as through factor graphs. The goal is to be able to understand the representations and message passing in the model that leads to decisions.

In summary, Lance argues active inference provides a principled approach to AI with advantages for explainability, safety, and human-like learning. Combining it with core knowledge and structural learning aims to achieve more human-like artificial intelligence.

https://www.lancelotdacosta.com/

https://twitter.com/lancelotdacosta

Interviewer: Dr. Tim Scarfe

TOC

00:00:00 - Start

00:09:27 - Intelligence

00:12:37 - Priors / structure learning

00:17:21 - Core knowledge

00:29:05 - Intelligence is specialised

00:33:21 - The magic of agents

00:39:30 - Intelligibility of structure learning

#artificialintelligence #activeinference

![THE HARD PROBLEM OF OBSERVERS - WOLFRAM & FRISTON [SPECIAL EDITION]](https://d3t3ozftmdmh3i.cloudfront.net/staging/podcast_uploaded_episode400/4981699/4981699-1698618974465-07f8f56402934.jpg)

THE HARD PROBLEM OF OBSERVERS - WOLFRAM & FRISTON [SPECIAL EDITION]

Please support us! https://www.patreon.com/mlst https://discord.gg/aNPkGUQtc5 https://twitter.com/MLStreetTalk

YT version (with intro not found here) https://youtu.be/6iaT-0Dvhnc

This is the epic special edition show you have been waiting for! With two of the most brilliant scientists alive today. Atoms, things, agents, ... observers. What even defines an "observer" and what properties must all observers share? How do objects persist in our universe given that their material composition changes over time? What does it mean for a thing to be a thing? And do things supervene on our lower-level physical reality? What does it mean for a thing to have agency? What's the difference between a complex dynamical system with and without agency? Could a rock or an AI catflap have agency? Can the universe be factorised into distinct agents, or is agency diffused? Have you ever pondered these deep questions about reality? Prof. Friston and Dr. Wolfram have each spent their entire careers, some 40+ years, thinking long and hard about these very questions and have developed significant frameworks of reference on their respective journeys (the Wolfram Physics project and the Free Energy principle).

Panel: MIT Ph.D Keith Duggar

Production: Dr. Tim Scarfe

Refs:

TED Talk with Stephen: https://www.ted.com/talks/stephen_wolfram_how_to_think_computationally_about_ai_the_universe_and_everything

https://writings.stephenwolfram.com/2023/10/how-to-think-computationally-about-ai-the-universe-and-everything/

TOC

00:00:00 - Show kickoff

00:02:38 - Wolfram gets to grips with FEP

00:27:08 - How much control does an agent/observer have

00:34:52 - Observer persistence, what universe seems like to us

00:40:31 - Black holes

00:45:07 - Inside vs outside

00:52:20 - Moving away from the predictable path

00:55:26 - What can observers do

01:06:50 - Self modelling gives agency

01:11:26 - How do you know a thing has agency?

01:22:48 - Deep link between dynamics, ruliad and AI

01:25:52 - Does agency entail free will? Defining Agency

01:32:57 - Where do I probe for agency?

01:39:13 - Why is the universe the way we see it?

01:42:50 - Alien intelligence

01:43:40 - The hard problem of Observers

01:46:20 - Summary thoughts from Wolfram

01:49:35 - Factorisability of FEP

01:57:05 - Patreon interview teaser

DR. JEFF BECK - THE BAYESIAN BRAIN

Support us! https://www.patreon.com/mlst

MLST Discord: https://discord.gg/aNPkGUQtc5

YT version: https://www.youtube.com/watch?v=c4praCiy9qU

Dr. Jeff Beck is a computational neuroscientist studying probabilistic reasoning (decision making under uncertainty) in humans and animals with emphasis on neural representations of uncertainty and cortical implementations of probabilistic inference and learning. His line of research incorporates information theoretic and hierarchical statistical analysis of neural and behavioural data as well as reinforcement learning and active inference.

https://www.linkedin.com/in/jeff-beck...

https://scholar.google.com/citations?...

Interviewer: Dr. Tim Scarfe

TOC

00:00:00 Intro

00:00:51 Bayesian / Knowledge

00:14:57 Active inference

00:18:58 Mediation

00:23:44 Philosophy of mind / science

00:29:25 Optimisation

00:42:54 Emergence

00:56:38 Steering emergent systems

01:04:31 Work plan

01:06:06 Representations/Core knowledge

#activeinference

Prof. Melanie Mitchell 2.0 - AI Benchmarks are Broken!

Patreon: https://www.patreon.com/mlst

Discord: https://discord.gg/ESrGqhf5CB

Prof. Melanie Mitchell argues that the concept of "understanding" in AI is ill-defined and multidimensional - we can't simply say an AI system does or doesn't understand. She advocates for rigorously testing AI systems' capabilities using proper experimental methods from cognitive science. Popular benchmarks for intelligence often rely on the assumption that if a human can perform a task, an AI that performs the task must have human-like general intelligence. But benchmarks should evolve as capabilities improve.

Large language models show surprising skill on many human tasks but lack common sense and fail at simple things young children can do. Their knowledge comes from statistical relationships in text, not grounded concepts about the world. We don't know if their internal representations actually align with human-like concepts. More granular testing focused on generalization is needed.

There are open questions around whether large models' abilities constitute a fundamentally different non-human form of intelligence based on vast statistical correlations across text. Mitchell argues intelligence is situated, domain-specific and grounded in physical experience and evolution. The brain computes but in a specialized way honed by evolution for controlling the body. Extracting "pure" intelligence may not work.

Other key points:

- Need more focus on proper experimental method in AI research. Developmental psychology offers examples for rigorous testing of cognition.
- Reporting instance-level failures rather than just aggregate accuracy can provide insights.
- Scaling laws and complex systems science are an interesting area of complexity theory, with applications to understanding cities.
- Concepts like "understanding" and "intelligence" in AI force refinement of fuzzy definitions.
- Human intelligence may be more collective and social than we realize.

AI forces us to rethink concepts we apply anthropomorphically. The overall emphasis is on rigorously building the science of machine cognition through proper experimentation and benchmarking as we assess emerging capabilities.

TOC:

[00:00:00] Introduction and Munk AI Risk Debate Highlights

[00:05:00] Douglas Hofstadter on AI Risk

[00:06:56] The Complexity of Defining Intelligence

[00:11:20] Examining Understanding in AI Models

[00:16:48] Melanie's Insights on AI Understanding Debate

[00:22:23] Unveiling the Concept Arc

[00:27:57] AI Goals: A Human vs Machine Perspective

[00:31:10] Addressing the Extrapolation Challenge in AI

[00:36:05] Brain Computation: The Human-AI Parallel

[00:38:20] The Arc Challenge: Implications and Insights

[00:43:20] The Need for Detailed AI Performance Reporting

[00:44:31] Exploring Scaling in Complexity Theory

Errata: Note Tim said around 39 mins that a recent Stanford/DM paper modelling ARC “on GPT-4 got around 60%”. This is not correct and he misremembered. It was actually davinci3, and around 10%, which is still extremely good for a blank slate approach with an LLM and no ARC specific knowledge. Folks on our forum couldn’t reproduce the result. See paper linked below.

Books (MUST READ):

Artificial Intelligence: A Guide for Thinking Humans (Melanie Mitchell) https://www.amazon.co.uk/Artificial-Intelligence-Guide-Thinking-Humans/dp/B07YBHNM1C/?&_encoding=UTF8&tag=mlst00-21&linkCode=ur2&linkId=44ccac78973f47e59d745e94967c0f30&camp=1634&creative=6738

Complexity: A Guided Tour (Melanie Mitchell) https://www.amazon.co.uk/Audible-Complexity-A-Guided-Tour?&_encoding=UTF8&tag=mlst00-21&linkCode=ur2&linkId=3f8bd505d86865c50c02dd7f10b27c05&camp=1634&creative=6738

Show notes (transcript, full references etc)

https://atlantic-papyrus-d68.notion.site/Melanie-Mitchell-2-0-15e212560e8e445d8b0131712bad3000?pvs=25

YT version: https://youtu.be/29gkDpR2orc

Autopoietic Enactivism and the Free Energy Principle - Prof. Friston, Prof Buckley, Dr. Ramstead

We explore connections between FEP and enactivism, including tensions raised in a paper critiquing FEP from an enactivist perspective.

Dr. Maxwell Ramstead provides background on enactivism emerging from autopoiesis, with a focus on embodied cognition and rejecting information processing/computational views of mind.

Chris shares his journey from robotics into FEP, starting as a skeptic but becoming convinced it's the right framework. He notes there are both "high road" and "low road" versions, ranging from embodied to more radically anti-representational stances. He doesn't see a definitive fork between dynamical systems and information theory as the source of conflict. Rather, the notion of operational closure in enactivism seems to be the main sticking point.

The group explores definitional issues around structure/organization, boundaries, and operational closure. Maxwell argues the generative model in FEP captures organizational dependencies akin to operational closure. The Markov blanket formalism models structural interfaces.

We discuss the concept of goals in cognitive systems - Chris advocates an intentional stance perspective - using notions of goals/intentions if they help explain system dynamics. Goals emerge from beliefs about dynamical trajectories. Prof Friston provides an elegant explanation of how goal-directed behavior naturally falls out of the FEP mathematics in a particular "goldilocks" regime of system scale/dynamics. The conversation explores the idea that many systems simply act "as if" they have goals or models, without necessarily possessing explicit representations. This helps resolve tensions between enactivist and computational perspectives.

Throughout the dialogue, Maxwell presses philosophical points about the FEP abolishing what he perceives as false dichotomies in cognitive science such as internalism/externalism. He is critical of enactivists' commitment to bright line divides between subject areas.

Prof. Karl Friston - Inventor of the free energy principle https://scholar.google.com/citations?user=q_4u0aoAAAAJ

Prof. Chris Buckley - Professor of Neural Computation at Sussex University https://scholar.google.co.uk/citations?user=nWuZ0XcAAAAJ&hl=en

Dr. Maxwell Ramstead - Director of Research at VERSES https://scholar.google.ca/citations?user=ILpGOMkAAAAJ&hl=fr

We address critique in this paper:

Laying down a forking path: Tensions between enaction and the free energy principle (Ezequiel A. Di Paolo, Evan Thompson, Randall D. Beer)

https://philosophymindscience.org/index.php/phimisci/article/download/9187/8975

Other refs:

Multiscale integration: beyond internalism and externalism (Maxwell J D Ramstead)

https://pubmed.ncbi.nlm.nih.gov/33627890/

MLST panel: Dr. Tim Scarfe and Dr. Keith Duggar

TOC (auto generated):

0:00 - Introduction

0:41 - Defining enactivism and its variants

6:58 - The source of the conflict between dynamical systems and information theory

8:56 - Operational closure in enactivism

10:03 - Goals and intentions

12:35 - The link between dynamical systems and information theory

15:02 - Path integrals and non-equilibrium dynamics

18:38 - Operational closure defined

21:52 - Structure vs. organization in enactivism

24:24 - Markov blankets as interfaces

28:48 - Operational closure in FEP

30:28 - Structure and organization again

31:08 - Dynamics vs. information theory

33:55 - Goals and intentions emerge in the FEP mathematics

36:58 - The Good Regulator Theorem

49:30 - Enactivism and its relation to ecological psychology

52:00 - Goals, intentions and beliefs

55:21 - Boundaries and meaning

58:55 - Enactivism's rejection of information theory

1:02:08 - Beliefs vs goals

1:05:06 - Ecological psychology and FEP

1:08:41 - The Good Regulator Theorem

1:18:38 - How goal-directed behavior emerges

1:23:13 - Ontological vs metaphysical boundaries

1:25:20 - Boundaries as maps

1:31:08 - Connections to the maximum entropy principle

1:33:45 - Relations to quantum and relational physics

STEPHEN WOLFRAM 2.0 - Resolving the Mystery of the Second Law of Thermodynamics

Please check out Numerai - our sponsor @ http://numer.ai/mlst

Patreon: https://www.patreon.com/mlst

Discord: https://discord.gg/ESrGqhf5CB

The Second Law: Resolving the Mystery of the Second Law of Thermodynamics

Buy Stephen's book here - https://tinyurl.com/2jj2t9wa

The Language Game: How Improvisation Created Language and Changed the World by Morten H. Christiansen and Nick Chater

Buy here: https://tinyurl.com/35bvs8be

Stephen Wolfram starts by discussing the second law of thermodynamics - the idea that entropy, or disorder, tends to increase over time. He talks about how this law seems intuitively true, but has been difficult to prove. Wolfram outlines his decades-long quest to fully understand the second law, including failed early attempts to simulate particles mixing as a 12-year-old. He explains how irreversibility arises from the computational irreducibility of underlying physical processes coupled with our limited ability as observers to do the computations needed to "decrypt" the microscopic details.

The conversation then shifts to discussing language and how concepts allow us to communicate shared ideas between minds positioned in different parts of "rule space." Wolfram talks about the successes and limitations of using large language models to generate Wolfram Language code from natural language prompts. He sees it as a useful tool for getting started programming, but one still needs human refinement.

The final part of the conversation focuses on AI safety and governance. Wolfram notes uncontrolled actuation is where things can go wrong with AI systems. He discusses whether AI agents could have intrinsic experiences and goals, how we might build trust networks between AIs, and that managing a system of many AIs may be easier than a single AI. Wolfram emphasizes the need for more philosophical depth in thinking about AI aims, and draws connections between potential solutions and his work on computational irreducibility and physics.

Show notes: https://docs.google.com/document/d/1hXNHtvv8KDR7PxCfMh9xOiDFhU3SVDW8ijyxeTq9LHo/edit?usp=sharing

Pod version: TBA

https://twitter.com/stephen_wolfram

TOC:

00:00:00 - Introduction

00:02:34 - Second law book

00:14:01 - Reversibility / entropy / observers / equivalence

00:34:22 - Concepts/language in the ruliad

00:49:04 - Comparison to free energy principle

00:53:58 - ChatGPT / Wolfram / Language

01:00:17 - AI risk

Panel: Dr. Tim Scarfe @ecsquendor / Dr. Keith Duggar @DoctorDuggar

Prof. Jürgen Schmidhuber - FATHER OF AI ON ITS DANGERS

Please check out Numerai - our sponsor @ http://numer.ai/mlst

Patreon: https://www.patreon.com/mlst

Discord: https://discord.gg/ESrGqhf5CB

Professor Jürgen Schmidhuber, the father of artificial intelligence, joins us today. Schmidhuber discussed the history of machine learning, the current state of AI, and his career researching recursive self-improvement, artificial general intelligence and its risks.

Schmidhuber pointed out the importance of studying the history of machine learning to properly assign credit for key breakthroughs. He discussed some of the earliest machine learning algorithms. He also highlighted the foundational work of Leibniz, who discovered the chain rule that enables training of deep neural networks, and the ancient Antikythera mechanism, the first known gear-based computer.

Schmidhuber discussed limits to recursive self-improvement and artificial general intelligence, including physical constraints like the speed of light and what can be computed. He noted we have no evidence the human brain can do more than traditional computing. Schmidhuber sees humankind as a potential stepping stone to more advanced, spacefaring machine life which may have little interest in humanity. However, he believes commercial incentives point AGI development towards being beneficial and that open-source innovation can help to achieve "AI for all" symbolised by his company's motto "AI∀".

Schmidhuber discussed approaches he believes will lead to more general AI, including meta-learning, reinforcement learning, building predictive world models, and curiosity-driven learning. His "fast weight programming" approach from the 1990s involved one network altering another network's connections. This was actually the first Transformer variant, now called an unnormalised linear Transformer. He also described the first GANs in 1990, to implement artificial curiosity.

Schmidhuber reflected on his career researching AI. He said his fondest memories were gaining insights that seemed to solve longstanding problems, though new challenges always arose: "then for a brief moment it looks like the greatest thing since sliced bread and then you get excited ... but then suddenly you realize, oh, it's still not finished. Something important is missing." Since 1985 he has worked on systems that can recursively improve themselves, constrained only by the limits of physics and computability. He believes continual progress, shaped by both competition and collaboration, will lead to increasingly advanced AI.

On AI Risk: Schmidhuber: "To me it's indeed weird. Now there are all these letters coming out warning of the dangers of AI. And I think some of the guys who are writing these letters, they are just seeking attention because they know that AI dystopia are attracting more attention than documentaries about the benefits of AI in healthcare."

Schmidhuber believes we should be more concerned with existing threats like nuclear weapons than speculative risks from advanced AI. He said: "As far as I can judge, all of this cannot be stopped but it can be channeled in a very natural way that is good for humankind...there is a tremendous bias towards good AI, meaning AI that is good for humans...I am much more worried about 60 year old technology that can wipe out civilization within two hours, without any AI."

[this is truncated, read show notes]

YT: https://youtu.be/q27XMPm5wg8

Show notes: https://docs.google.com/document/d/13-vIetOvhceZq5XZnELRbaazpQbxLbf5Yi7M25CixEE/edit?usp=sharing

Note: Interview was recorded 15th June 2023.

https://twitter.com/SchmidhuberAI

Panel: Dr. Tim Scarfe @ecsquendor / Dr. Keith Duggar @DoctorDuggar

Pod version: TBA

TOC:

[00:00:00] Intro / Numerai

[00:00:51] Show Kick Off

[00:02:24] Credit Assignment in ML

[00:12:51] XRisk

[00:20:45] First Transformer variant of 1991

[00:47:20] Which Current Approaches are Good

[00:52:42] Autonomy / Curiosity

[00:58:42] GANs of 1990

[01:11:29] OpenAI, Moats, Legislation

Can We Develop Truly Beneficial AI? George Hotz and Connor Leahy

Patreon: https://www.patreon.com/mlst Discord: https://discord.gg/ESrGqhf5CB

George Hotz and Connor Leahy discuss the crucial challenge of developing beneficial AI that is aligned with human values. Hotz believes truly aligned AI is impossible, while Leahy argues it's a solvable technical challenge.

Hotz contends that AI will inevitably pursue power, but distributing AI widely would prevent any single AI from dominating. He advocates open-sourcing AI developments to democratize access. Leahy counters that alignment is necessary to ensure AIs respect human values. Without solving alignment, general AI could ignore or harm humans.

They discuss whether AI's tendency to seek power stems from optimization pressure or human-instilled goals. Leahy argues goal-seeking behavior naturally emerges while Hotz believes it reflects human values. Though agreeing on AI's potential dangers, they differ on solutions. Hotz favors accelerating AI progress and distributing capabilities while Leahy wants safeguards put in place.

While acknowledging risks like AI-enabled weapons, they debate whether broad access or restrictions better manage threats. Leahy suggests limiting dangerous knowledge, but Hotz insists openness checks government overreach. They concur that coordination and balance of power are key to navigating the AI revolution. Both eagerly anticipate seeing whose ideas prevail as AI progresses.

Transcript and notes: https://docs.google.com/document/d/1smkmBY7YqcrhejdbqJOoZHq-59LZVwu-DNdM57IgFcU/edit?usp=sharing

Note: this is not a normal episode i.e. the hosts are not part of the debate (and for the record don't agree with Connor or George).

TOC:

[00:00:00] Introduction to George Hotz and Connor Leahy

[00:03:10] George Hotz's Opening Statement: Intelligence and Power

[00:08:50] Connor Leahy's Opening Statement: Technical Problem of Alignment and Coordination

[00:15:18] George Hotz's Response: Nature of Cooperation and Individual Sovereignty

[00:17:32] Discussion on individual sovereignty and defense

[00:18:45] Debate on living conditions in America versus Somalia

[00:21:57] Talk on the nature of freedom and the aesthetics of life

[00:24:02] Discussion on the implications of coordination and conflict in politics

[00:33:41] Views on the speed of AI development / hard takeoff

[00:35:17] Discussion on potential dangers of AI

[00:36:44] Discussion on the effectiveness of current AI

[00:40:59] Exploration of potential risks in technology

[00:45:01] Discussion on memetic mutation risk

[00:52:36] AI alignment and exploitability

[00:53:13] Superintelligent AIs and the assumption of good intentions

[00:54:52] Humanity’s inconsistency and AI alignment

[00:57:57] Stability of the world and the impact of superintelligent AIs

[01:02:30] Personal utopia and the limitations of AI alignment

[01:05:10] Proposed regulation on limiting the total number of flops

[01:06:20] Having access to a powerful AI system

[01:18:00] Power dynamics and coordination issues with AI

[01:25:44] Humans vs AI in Optimization

[01:27:05] The Impact of AI's Power Seeking Behavior

[01:29:32] A Debate on the Future of AI

Dr. MAXWELL RAMSTEAD - The Physics of Survival

Patreon: https://www.patreon.com/mlst

Discord: https://discord.gg/ESrGqhf5CB

Join us for a fascinating discussion of the free energy principle with Dr. Maxwell Ramstead, a leading thinker exploring the intersection of math, physics, and philosophy and Director of Research at VERSES. Proposed by renowned neuroscientist Karl Friston, the FEP offers a unifying theory explaining how systems maintain order and their identity.

The free energy principle inverts traditional survival logic. Rather than asking what behaviors promote survival, it asks: given that things exist, what must they do? The answer: minimizing free energy, or "surprise." Systems persist by constantly ensuring their internal states match anticipated states based on a model of the world. Failure to minimize surprise leads to chaos as systems dissolve into disorder. Thus, the free energy principle elucidates why lifeforms relentlessly model and predict their surroundings. It is an existential imperative counterbalancing entropy. Essentially, this principle describes the mind's pursuit of harmony between expectations and reality. Its relevance spans from cells to societies, underlying order wherever longevity is found.

Our discussion explores the technical details and philosophical implications of this paradigm-shifting theory. How does it further our understanding of cognition and intelligence? What insights does it offer about the fundamental patterns and properties of existence? Can it precipitate breakthroughs in disciplines like neuroscience and artificial intelligence?

Dr. Ramstead completed his Ph.D. at McGill University in Montreal, Canada in 2019, with frequent research visits to UCL in London, under the supervision of the world’s most cited neuroscientist, Professor Karl Friston (UCL).

YT version: https://youtu.be/8qb28P7ksyE

https://scholar.google.ca/citations?user=ILpGOMkAAAAJ&hl=fr

https://spatialwebfoundation.org/team/maxwell-ramstead/

https://www.linkedin.com/in/maxwell-ramstead-43a1991b7/

https://twitter.com/mjdramstead

VERSES AI: https://www.verses.ai/

Intro: Tim Scarfe (Ph.D)

Interviewer: Keith Duggar (Ph.D MIT)

TOC:

0:00:00 - Tim Intro

0:08:10 - Intro and philosophy

0:14:26 - Intro to Maxwell

0:18:00 - FEP

0:29:08 - Markov Blankets

0:51:15 - Verses AI / Applications of FEP

1:05:55 - Potential issues with deploying FEP

1:10:50 - Shared knowledge graphs

1:14:29 - XRisk / Ethics

1:24:57 - Strength of Verses

1:28:30 - Misconceptions about FEP, Physics vs philosophy/criticism

1:44:41 - Emergence / consciousness

References:

Principia Mathematica https://www.abebooks.co.uk/servlet/BookDetailsPL?bi=30567249049

Andy Clark's paper "Whatever Next? Predictive Brains, Situated Agents, and the Future of Cognitive Science" (Behavioral and Brain Sciences, 2013) https://pubmed.ncbi.nlm.nih.gov/23663408/

"Math Does Not Represent" by Erik Curiel https://www.youtube.com/watch?v=aA_T20HAzyY

A free energy principle for generic quantum systems (Chris Fields et al) https://arxiv.org/pdf/2112.15242.pdf

Designing explainable artificial intelligence with active inference https://arxiv.org/abs/2306.04025

Am I Self-Conscious? (Friston) https://www.frontiersin.org/articles/10.3389/fpsyg.2018.00579/full

The Meta-Problem of Consciousness https://philarchive.org/archive/CHATMO-32v1

The Map-Territory Fallacy Fallacy https://arxiv.org/abs/2208.06924

A Technical Critique of Some Parts of the Free Energy Principle (Martin Biehl et al) https://arxiv.org/abs/2001.06408

Weak Markov Blankets in High-Dimensional, Sparsely-Coupled Random Dynamical Systems (Dalton A R Sakthivadivel) https://arxiv.org/pdf/2207.07620.pdf

![MUNK DEBATE ON AI (COMMENTARY) [DAVID FOSTER]](https://d3t3ozftmdmh3i.cloudfront.net/staging/podcast_uploaded_episode400/4981699/4981699-1688320775869-2241d6d3f007a.jpg)

MUNK DEBATE ON AI (COMMENTARY) [DAVID FOSTER]

Patreon: https://www.patreon.com/mlst

Discord: https://discord.gg/ESrGqhf5CB

The discussion between Tim Scarfe and David Foster provided an in-depth critique of the arguments made by panelists at the Munk AI Debate on whether artificial intelligence poses an existential threat to humanity. While the panelists made thought-provoking points, Scarfe and Foster found their arguments largely speculative, lacking crucial details and evidence to support claims of an impending existential threat.

Scarfe and Foster strongly disagreed with Max Tegmark’s position that AI has an unparalleled “blast radius” that could lead to human extinction. Tegmark failed to provide a credible mechanism for how this scenario would unfold in reality. His arguments relied more on speculation about advanced future technologies than on present capabilities and trends. As Foster argued, we cannot conclude AI poses a threat based on speculation alone. Evidence is needed to ground discussions of existential risks in science rather than science fiction fantasies or doomsday scenarios.

They found Yann LeCun’s statements too broad and high-level, critiquing him for not providing sufficiently strong arguments or specifics to back his position. While LeCun aptly noted AI remains narrow in scope and far from achieving human-level intelligence, his arguments lacked crucial details on current limitations and why we should not fear superintelligence emerging in the near future. As Scarfe argued, without these details the discussion descended into “philosophy” rather than focusing on evidence and data.

Scarfe and Foster also took issue with Yoshua Bengio’s unsubstantiated speculation that machines would necessarily develop a desire for self-preservation that threatens humanity. There is no evidence today’s AI systems are developing human-like general intelligence or desires, let alone that these attributes would manifest in ways dangerous to humans. The question is not whether machines will eventually surpass human intelligence, but how and when this might realistically unfold based on present technological capabilities. Bengio’s arguments relied more on speculation about advanced future technologies than on evidence from current systems and research.

In contrast, they strongly agreed with Melanie Mitchell’s view that scenarios of malevolent or misguided superintelligence are speculation, not backed by evidence from AI as it exists today. Claims of an impending “existential threat” from AI are overblown, harmful to progress, and inspire undue fear of technology rather than consideration of its benefits. Mitchell sensibly argued discussions of risks from emerging technologies must be grounded in science and data, not speculation, if we are to make balanced policy and development decisions.

Overall, while the debate raised thought-provoking questions about advanced technologies that could eventually transform our world, none of the speakers made a credible evidence-based case that today’s AI poses an existential threat. Scarfe and Foster argued the debate failed to discuss concrete details about current capabilities and limitations of technologies like language models, which remain narrow in scope. General human-level AI is still missing many components, including physical embodiment, emotions, and the "common sense" reasoning that underlies human thinking. Claims of existential threats require extraordinary evidence to justify policy or research restrictions, not speculation. By discussing possibilities rather than probabilities grounded in evidence, the debate failed to substantively advance our thinking on risks from AI and its plausible development in the coming decades.

David's new podcast: https://podcasts.apple.com/us/podcast/the-ai-canvas/id1692538973

Generative AI book: https://www.oreilly.com/library/view/generative-deep-learning/9781098134174/

![[SPONSORED] The Digitized Self: AI, Identity and the Human Psyche (YouAi)](https://d3t3ozftmdmh3i.cloudfront.net/staging/podcast_uploaded_episode400/4981699/4981699-1688027067569-20e1067ee3b0c.jpg)

[SPONSORED] The Digitized Self: AI, Identity and the Human Psyche (YouAi)

Sponsored Episode - YouAi

What if an AI truly knew you: your thoughts, values, aptitudes, and dreams? An AI that could enhance your life in profound ways by amplifying your strengths, augmenting your weaknesses, and connecting you with like-minded souls. That is the vision of YouAi.

YouAi founder Dmitri Shapiro believes digitizing our inner lives could unlock tremendous benefits. But mapping the human psyche also poses deep questions. As technology mediates our self-understanding, what do we risk by rendering our minds in bits and algorithms? Could we gain a new means of flourishing or lose something intangible? There are no easy answers, but YouAi offers a vision balanced by hard thinking.

Shapiro discussed YouAi's app, which builds personalized AI assistants by learning how individuals think through interactive questions. As people share, YouAi develops a multidimensional model of their mind. Users get a tailored feed of prompts to continue engaging and teaching their AI.

YouAi's vision provides a glimpse into a future that could unsettle or fulfill our hopes. As technology mediates understanding ourselves and others, will we risk losing what makes us human or find new means of flourishing? YouAi believes that together, we can build a future where our minds contain infinite potential, and their technology helps unlock it. But we must proceed thoughtfully, upholding human dignity above all else. Our minds shape who we are. And who we can become.

Digitise your mind today:

YouAi - https://YouAi.ai

MindStudio - https://YouAi.ai/mindstudio

YouAi Mind Indexer - https://YouAi.ai/train

Join the MLST discord and register for the YouAi event on July 13th: https://discord.gg/ESrGqhf5CB

TOC:

0:00:00 - Introduction to Mind Digitization

0:09:31 - The YouAi Platform and Personal Applications

0:27:54 - The Potential of Group Alignment

0:30:28 - Applications in Human-to-Human Communication

0:35:43 - Applications in Interfacing with Digital Technology

0:43:41 - Introduction to the Project

0:44:51 - Brain digitization and mind vs. brain

0:49:55 - The Extended Mind and Neurofeedback

0:54:16 - Personalized Learning and the Future of Education

1:02:19 - Privacy and Data Security

1:14:20 - Ethical Considerations of Digitizing the Mind

1:19:49 - The Metaverse and the Future of Digital Identity

1:25:17 - Digital Immortality and Legacy

1:29:09 - The Nature of Consciousness

1:34:11 - Digitization of the Mind

1:35:06 - Potential Inequality in a Digital World

1:38:00 - The Role of Technology in Equalizing or Democratizing Society

1:40:51 - The Future of the Startup and Community Involvement

Joscha Bach and Connor Leahy on AI risk

Support us! https://www.patreon.com/mlst

MLST Discord: https://discord.gg/aNPkGUQtc5

Twitter: https://twitter.com/MLStreetTalk

The first 10 minutes of Joscha's audio aren't great; the quality improves after that.

Transcript and longer summary: https://docs.google.com/document/d/1TUJhlSVbrHf2vWoe6p7xL5tlTK_BGZ140QqqTudF8UI/edit?usp=sharing

Dr. Joscha Bach argued that general intelligence emerges from civilization, not individuals. Given our biological constraints, humans cannot achieve a high level of general intelligence on our own. Bach believes AGI may become integrated into all parts of the world, including human minds and bodies. He thinks a future where humans and AGI harmoniously coexist is possible if we develop a shared purpose and incentive to align. However, Bach is uncertain about how AI progress will unfold or which scenarios are most likely.

Bach argued that global control and regulation of AI is unrealistic. While regulation may address some concerns, it cannot stop continued progress in AI. He believes individuals determine their own values, so "human values" cannot be formally specified and aligned across humanity. For Bach, the possibility of building beneficial AGI is exciting but much work is still needed to ensure a positive outcome.

Connor Leahy believes we have more control over the future than the default outcome might suggest. With sufficient time and effort, humanity could develop the technology and coordination to build a beneficial AGI. However, the default outcome likely leads to an undesirable scenario if we do not actively work to build a better future. Leahy thinks finding values and priorities most humans endorse could help align AI, even if individuals disagree on some values.

Leahy argued a future where humans and AGI harmoniously coexist is ideal but will require substantial work to achieve. While regulation faces challenges, it remains worth exploring. Leahy believes limits to progress in AI exist but we are unlikely to reach them before humanity is at risk. He worries even modestly superhuman intelligence could disrupt the status quo if misaligned with human values and priorities.

Overall, Bach and Leahy expressed optimism about the possibility of building beneficial AGI but believe we must address risks and challenges proactively. They agreed substantial uncertainty remains around how AI will progress and what scenarios are most plausible. But developing a shared purpose between humans and AI, improving coordination and control, and finding human values to help guide progress could all improve the odds of a beneficial outcome. With openness to new ideas and willingness to consider multiple perspectives, continued discussions like this one could help ensure the future of AI is one that benefits and inspires humanity.

TOC:

00:00:00 - Introduction and Background

00:02:54 - Different Perspectives on AGI

00:13:59 - The Importance of AGI

00:23:24 - Existential Risks and the Future of Humanity

00:36:21 - Coherence and Coordination in Society

00:40:53 - Possibilities and Future of AGI

00:44:08 - Coherence and alignment

01:08:32 - The role of values in AI alignment

01:18:33 - The future of AGI and merging with AI

01:22:14 - The limits of AI alignment

01:23:06 - The scalability of intelligence

01:26:15 - Closing statements and future prospects

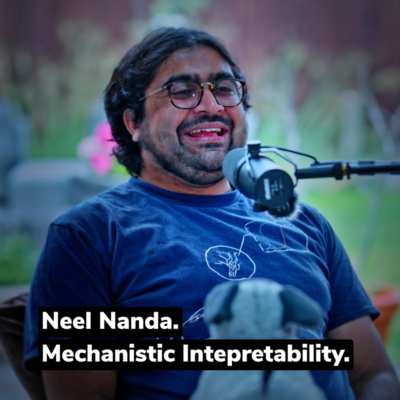

Neel Nanda - Mechanistic Interpretability

In this wide-ranging conversation, Tim Scarfe interviews Neel Nanda, a researcher at DeepMind working on mechanistic interpretability, which aims to understand the algorithms and representations learned by machine learning models. Neel discusses how models can represent their thoughts using motifs, circuits, and linear directional features which are often communicated via a "residual stream", an information highway models use to pass information between layers.

Neel argues that "superposition", the ability of models to represent more features than they have neurons, is one of the biggest open problems in interpretability. This is because superposition thwarts our ability to understand models by decomposing them into individual units of analysis. Despite this, Neel remains optimistic that ambitious interpretability is possible, citing examples like his work reverse engineering how models do modular addition. However, Neel notes we must start small, build rigorous foundations, and not assume our theoretical frameworks perfectly match reality.
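As a rough illustration of the superposition idea described above (this sketch is not taken from the episode), the toy below stores three features in a two-dimensional activation space by giving each feature its own direction, 120 degrees apart. The names `directions`, `encode`, and `decode`, and the choice of three features in two dimensions, are assumptions made purely for illustration. When only one feature is active, a ReLU readout recovers it cleanly; when two are active at once, interference between the non-orthogonal directions distorts the readout.

```python
import math

# Hypothetical toy setup: 3 features squeezed into a 2-dimensional space.
NUM_FEATURES = 3

# One unit-length direction per feature, spaced evenly around the circle.
directions = [
    (math.cos(2 * math.pi * k / NUM_FEATURES),
     math.sin(2 * math.pi * k / NUM_FEATURES))
    for k in range(NUM_FEATURES)
]


def encode(features):
    """Sum each feature's value times its direction into one 2-D activation."""
    x = sum(f * d[0] for f, d in zip(features, directions))
    y = sum(f * d[1] for f, d in zip(features, directions))
    return (x, y)


def decode(activation):
    """Read each feature back with a dot product followed by a ReLU."""
    return [
        max(0.0, activation[0] * d[0] + activation[1] * d[1])
        for d in directions
    ]


# Sparse input: only feature 1 is active, so the readout is close to [0, 1, 0].
print(decode(encode([0.0, 1.0, 0.0])))

# Dense input: features 0 and 1 are both active, and each reads back as
# roughly 0.5 instead of 1.0 because the directions interfere.
print(decode(encode([1.0, 1.0, 0.0])))
```

The point of the sketch is only that the individual coordinates of the activation do not correspond to individual features, which is why decomposing a model neuron by neuron can fail when features are packed this way.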

The conversation turns to whether models can have goals or agency, with Neel arguing they likely can based on heuristics like models executing long-term plans towards some objective. However, we currently lack techniques to build models with specific goals, meaning any goals would likely be learned or emergent. Neel highlights how induction heads, circuits that models use to track long-range dependencies, seem crucial for phenomena like in-context learning to emerge.
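To make the induction-head behaviour mentioned above a little more concrete, here is a minimal sketch of the input-output pattern usually attributed to such circuits: if the current token appeared earlier in the context, predict whatever followed it last time. This is an assumption-laden illustration, not something from the episode; the function name `induction_guess` is hypothetical, and the code mimics only the behaviour, not the attention mechanism that implements it inside a transformer.

```python
def induction_guess(tokens):
    """Guess the next token by copying what followed the most recent
    earlier occurrence of the current (last) token.

    Returns None when the context is empty or the current token has
    not been seen before.
    """
    if not tokens:
        return None
    current = tokens[-1]
    # Scan backwards over earlier positions, skipping the last token itself.
    for i in range(len(tokens) - 2, -1, -1):
        if tokens[i] == current:
            return tokens[i + 1]
    return None


# Hypothetical example context: "Mr" was followed by "Dursley" earlier,
# so the guess after the second "Mr" is "Dursley".
context = ["Mr", "Dursley", "was", "proud", "of", "Mr"]
print(induction_guess(context))
```

Because the lookup depends only on what is in the context window, not on anything memorised during training, this kind of copy-from-earlier pattern is one simple way to picture how in-context learning could arise.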

On the existential risks from AI, Neel believes we should avoid overly confident claims that models will or will not be dangerous, as we do not understand them enough to make confident theoretical assertions. However, models could pose risks through being misused, having undesirable emergent properties, or being imperfectly aligned. Neel argues we must pursue rigorous empirical work to better understand and ensure model safety, avoid "philosophizing" about definitions of intelligence, and focus on ensuring researchers have standards for what it means to decide a system is "safe" before deploying it. Overall, a thoughtful conversation on one of the most important issues of our time.

Support us! https://www.patreon.com/mlst

MLST Discord: https://discord.gg/aNPkGUQtc5

Twitter: https://twitter.com/MLStreetTalk

Neel Nanda: https://www.neelnanda.io/

TOC

[00:00:00] Introduction and Neel Nanda's Interests (walk and talk)

[00:03:15] Mechanistic Interpretability: Reverse Engineering Neural Networks

[00:13:23] Discord questions

[00:21:16] Main interview kick-off in studio

[00:49:26] Grokking and Sudden Generalization

[00:53:18] The Debate on Systematicity and Compositionality

[01:19:16] How do ML models represent their thoughts

[01:25:51] Do Large Language Models Learn World Models?

[01:53:36] Superposition and Interference in Language Models

[02:43:15] Transformers discussion

[02:49:49] Emergence and In-Context Learning

[03:20:02] Superintelligence/XRisk discussion

Transcript: https://docs.google.com/document/d/1FK1OepdJMrqpFK-_1Q3LQN6QLyLBvBwWW_5z8WrS1RI/edit?usp=sharing

Refs: https://docs.google.com/document/d/115dAroX0PzSduKr5F1V4CWggYcqIoSXYBhcxYktCnqY/edit?usp=sharing

Prof. Daniel Dennett - Could AI Counterfeit People Destroy Civilization? (SPECIAL EDITION)

Please check out Numerai, our sponsor, using our link:

http://numer.ai/mlst

Numerai is a groundbreaking platform which is taking the data science world by storm. Tim has been using Numerai to build state-of-the-art models which predict the stock market, all while being a part of an inspiring community of data scientists from around the globe. They host the Numerai Data Science Tournament, where data scientists like us use their financial dataset to predict future stock market performance.

Support us! https://www.patreon.com/mlst

MLST Discord: https://discord.gg/aNPkGUQtc5

Twitter: https://twitter.com/MLStreetTalk

YT version: https://youtu.be/axJtywd9Tbo

In this fascinating interview, Dr. Tim Scarfe speaks with renowned philosopher Daniel Dennett about the potential dangers of AI and the concept of "Counterfeit People." Dennett raises concerns about AI being used to create artificial colleagues, and argues that preventing counterfeit AI individuals is crucial for societal trust and security.

They delve into Dennett's "Two Black Boxes" thought experiment, the Chinese Room Argument by John Searle, and discuss the implications of AI in terms of reversibility, reontologisation, and realism. Dr. Scarfe and Dennett also examine adversarial LLMs, mental trajectories, and the emergence of consciousness and semanticity in AI systems.

Throughout the conversation, they touch upon various philosophical perspectives, including Gilbert Ryle's Ghost in the Machine, Chomsky's work, and the importance of competition in academia. Dennett concludes by highlighting the need for legal and technological barriers to protect against the dangers of counterfeit AI creations.

Join Dr. Tim Scarfe and Daniel Dennett in this thought-provoking discussion about the future of AI and the potential challenges we face in preserving our civilization. Don't miss this insightful conversation!

TOC:

00:00:00 Intro

00:09:56 Main show kick off

00:12:04 Counterfeit People

00:16:03 Reversibility

00:20:55 Reontologisation

00:24:43 Realism

00:27:48 Adversarial LLMs are out to get us

00:32:34 Exploring mental trajectories and Chomsky

00:38:53 Gilbert Ryle and Ghost in machine and competition in academia

00:44:32 2 Black boxes thought experiment / intentional stance

01:00:11 Chinese room

01:04:49 Singularitarianism

01:07:22 Emergence of consciousness and semanticity

References:

Tree of Thoughts: Deliberate Problem Solving with Large Language Models

https://arxiv.org/abs/2305.10601

The Problem With Counterfeit People (Daniel Dennett)

https://www.theatlantic.com/technology/archive/2023/05/problem-counterfeit-people/674075/

The knowledge argument

https://en.wikipedia.org/wiki/Knowledge_argument

The Intentional Stance

https://www.researchgate.net/publication/271180035_The_Intentional_Stance

Two Black Boxes: a Fable (Daniel Dennett)

https://www.researchgate.net/publication/28762339_Two_Black_Boxes_a_Fable

The Chinese Room Argument (John Searle)

https://plato.stanford.edu/entries/chinese-room/

https://web-archive.southampton.ac.uk/cogprints.org/7150/1/10.1.1.83.5248.pdf

From Bacteria to Bach and Back: The Evolution of Minds (Daniel Dennett)

https://www.amazon.co.uk/Bacteria-Bach-Back-Evolution-Minds/dp/014197804X

Consciousness Explained (Daniel Dennett)

https://www.amazon.co.uk/Consciousness-Explained-Penguin-Science-Dennett/dp/0140128670/

The Mind's I: Fantasies and Reflections on Self and Soul (Hofstadter, Douglas R; Dennett, Daniel C.)

https://www.abebooks.co.uk/servlet/BookDetailsPL?bi=31494476184

#DanielDennett #ArtificialIntelligence #CounterfeitPeople

![Decoding the Genome: Unraveling the Complexities with AI and Creativity [Prof. Jim Hughes, Oxford]](https://d3t3ozftmdmh3i.cloudfront.net/staging/podcast_uploaded_episode400/4981699/4981699-1685574297009-5be7b03cfefa.jpg)

Decoding the Genome: Unraveling the Complexities with AI and Creativity [Prof. Jim Hughes, Oxford]